Restricting crawler access

This post belongs to a series about search engine optimization (SEO) with Magnolia CMS. Today we look at restricting crawler access.

While SEO is mostly about enticing crawlers to your content, there are some pages you may not want them to access. These include administrative, password-protected, and paid-access pages.

A robots.txt file restricts crawler access on a site-wide basis. The file is typically placed in the root directory of your site. All legitimate crawlers check for the presence of a robots.txt file and respect the directives it contains. Even if you do not want to restrict access, it is advisable to include a robots.txt file, because the absence of one has been known to produce a 404 error, resulting in none of your pages being crawled. This can also be the case if the file is not constructed correctly. Specific crawlers can also be denied access by naming their user-agent in the file.
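To see how a well-behaved crawler might interpret such a file, here is a minimal Python sketch using the standard library's `urllib.robotparser`. The rules, the `/admin/` path, the bot names `GoodBot` and `BadBot`, and the `www.example.com` URLs are all hypothetical and chosen only for illustration; real crawlers may apply their own matching rules.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: every crawler must stay out of /admin/,
# and the crawler calling itself "BadBot" is denied everything.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

User-agent: BadBot
Disallow: /
"""


def build_parser(robots_txt):
    """Parse robots.txt content from a string instead of fetching it."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# An ordinary crawler may fetch public pages but not the admin area.
print(parser.can_fetch("GoodBot", "http://www.example.com/index.html"))   # True
print(parser.can_fetch("GoodBot", "http://www.example.com/admin/users"))  # False

# The named crawler is denied access to the whole site.
print(parser.can_fetch("BadBot", "http://www.example.com/index.html"))    # False
```

Running a check like this against your own file before publishing is a cheap way to catch a badly constructed robots.txt.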

The following example instructs all crawlers to keep out of the private and temp directories and denies access to a specified crawler.

User-agent: *
Disallow: /private/
Disallow: /temp/

User-agent: BadBot # replace 'BadBot' with the actual user-agent of the bot
Disallow: /

It is also possible to exclude individual pages with a robots meta element in the page HTML header.

<html>
<head>
<title>...</title>
<META NAME="ROBOTS" CONTENT="NOINDEX, NOFOLLOW">
</head>

Relevant values for the robots meta element are:

- NOINDEX prevents the page from being included in the index.

- NOFOLLOW prevents the crawler from following any links on the page. Note that this differs from the link-level rel attribute, which prevents the crawler from following an individual link. For anti-spam purposes, it is generally better to set rel="nofollow" on individual links.

- NOARCHIVE instructs the engine not to keep a publicly available cached copy of the page. With Google, this prevents a cached copy of the page from being available in search results.
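The values above can be read back from a page with a few lines of Python. The following is a rough sketch using the standard library's `html.parser`; the class name `RobotsMetaParser`, the helper `robots_directives`, and the sample page are hypothetical, and a real crawler would handle more cases (for example, engine-specific meta names).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of any robots meta element in a page."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        # Tag and attribute names arrive lowercased; values keep their case.
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def robots_directives(html):
    """Return the set of lowercased robots directives found in the HTML."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return parser.directives


page = """<html>
<head>
<title>Members only</title>
<META NAME="ROBOTS" CONTENT="NOINDEX, NOFOLLOW">
</head>
</html>"""

directives = robots_directives(page)
may_index = "noindex" not in directives
may_follow = "nofollow" not in directives
print(sorted(directives), may_index, may_follow)
```

A page without a robots meta element yields an empty set, which this sketch treats as full access.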

Preventing crawlers from following undesirable links can help combat automated spam. This method is endorsed by all major engines. Adding a rel="nofollow" attribute to a link gives you control on a per-link basis. You can use it to reference external content without endorsing it, and it removes the need to exclude the crawler from all links on a page.

<a href="http://www.badsite.com" rel="nofollow">...</a>
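From the crawler's side, honoring the attribute amounts to sorting links into those it may follow and those it should skip. Here is a minimal Python sketch of that idea, again using `html.parser`; the class name `FollowableLinkParser`, the `follow` and `skip` lists, and the `www.example.com` URL are hypothetical names used only for this illustration.

```python
from html.parser import HTMLParser


class FollowableLinkParser(HTMLParser):
    """Separates links a crawler may follow from rel="nofollow" links."""

    def __init__(self):
        super().__init__()
        self.follow = []
        self.skip = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = attributes.get("href")
        if not href:
            return
        # rel holds space-separated tokens, e.g. rel="external nofollow".
        rel_tokens = (attributes.get("rel") or "").lower().split()
        if "nofollow" in rel_tokens:
            self.skip.append(href)
        else:
            self.follow.append(href)


snippet = """
<p>See <a href="http://www.example.com/docs">the docs</a> and
<a href="http://www.badsite.com" rel="nofollow">this site</a>.</p>
"""

links = FollowableLinkParser()
links.feed(snippet)
print(links.follow)  # ['http://www.example.com/docs']
print(links.skip)    # ['http://www.badsite.com']
```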

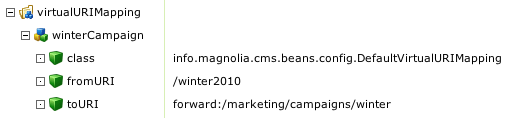

You can use all the above access restriction methods in Magnolia:

- Editing a robots.txt file is much simpler because Magnolia produces clean, human-readable URLs such as http://www.magnolia-cms.com/support-and-services.

- At a single-page level, templates set the robots meta element in the HTML header to "all" by default, allowing full crawler access. Options to change this behavior can be offered to editors directly in AdminCentral with minimal configuration. Customization involves adding a field to the Page Info dialog definition and making a minor tweak to the paragraph template.