Shallow site architecture

This post belongs to a series about search engine optimization (SEO) with Magnolia CMS. Today we look at providing a shallow site architecture.

Site architecture is critical to SEO. Many sites grow organically, and their architecture turns into an illogical maze. A clear structure gives both users and web crawlers a positive navigational experience.

As a rule of thumb, the flatter the structure, the better. The higher a page sits in the site structure, the more likely search engines are to rank it highly. Crawlers seem to judge the depth of a page by the number of directories in its URL, giving more relevance, for example, to /books/art than to /books/bestsellers/hardcover/art. A limit of one or two levels is recommended, but this is not always feasible for larger sites.
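The directory-counting heuristic is easy to apply when auditing your own URLs. The Python sketch below counts path segments and flags pages deeper than a chosen limit; the helper names and the default limit of two levels are assumptions for illustration, and it does not claim to reproduce how any particular search engine measures depth.

```python
from urllib.parse import urlparse


def url_depth(url: str) -> int:
    """Count the non-empty path segments ("directories") in a URL or path."""
    path = urlparse(url).path
    return len([segment for segment in path.split("/") if segment])


def too_deep(urls: list[str], max_depth: int = 2) -> list[str]:
    """Return the URLs whose path has more than max_depth levels (hypothetical helper)."""
    return [url for url in urls if url_depth(url) > max_depth]


if __name__ == "__main__":
    urls = [
        "https://www.example.com/books/art",
        "https://www.example.com/books/bestsellers/hardcover/art",
    ]
    for url in urls:
        print(url_depth(url), url)
    print(too_deep(urls))
```

Running it over a sitemap export gives a quick list of candidate pages to move higher in the tree or to shorten with a URI mapping.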

It is also important to keep your site consistent. All pages should follow the same format, and once you have established URL guidelines, you should stick to them. Where possible, avoid subdomains, as crawlers may treat them as separate from your main domain.
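Both rules can be checked automatically. The sketch below assumes a hypothetical house convention (lowercase words joined by hyphens, optionally ending in `.html`) and a main domain of `example.com`; substitute your own guidelines. It flags URLs that break the convention and URLs hosted on a subdomain rather than on the main site.

```python
import re
from urllib.parse import urlparse

# Hypothetical house convention: each path segment is lowercase words joined by
# hyphens, optionally ending in ".html" (e.g. /books/art-history.html).
SEGMENT_PATTERN = re.compile(r"^[a-z0-9]+(?:-[a-z0-9]+)*(?:\.html)?$")


def follows_convention(url: str) -> bool:
    """True if every path segment of the URL matches SEGMENT_PATTERN."""
    segments = [s for s in urlparse(url).path.split("/") if s]
    return all(SEGMENT_PATTERN.match(s) for s in segments)


def is_subdomain(url: str, main_domain: str) -> bool:
    """True if the URL is on a subdomain of main_domain (www itself is not counted)."""
    host = urlparse(url).hostname or ""  # hostname is already lowercased
    if host in (main_domain, "www." + main_domain):
        return False
    return host.endswith("." + main_domain)
```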

Magnolia stores content in a hierarchical content repository. Editors see the hierarchy as a tree in which each page sits on a branch and has a parent node.

Organizing a site as a hierarchy enforces consistent structure. Pages and branches can be rearranged via drag-and-drop.
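To make the parent/child relationship concrete, here is a small Python model of a site tree in which a page's URL path is derived from its chain of parents. It is an illustrative sketch only: `PageNode` and its methods are hypothetical and are not Magnolia's repository API. Because the path is computed rather than stored, moving a page (as a drag-and-drop would) updates the paths of its whole branch at once, which is what keeps the structure consistent.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass(eq=False)  # identity-based equality, so parent/child cycles are never compared
class PageNode:
    """A page in a hierarchical site tree (hypothetical model, not Magnolia's API)."""

    name: str
    parent: Optional[PageNode] = field(default=None, repr=False)
    children: list[PageNode] = field(default_factory=list, repr=False)

    def add(self, name: str) -> PageNode:
        """Create a child page under this page and return it."""
        child = PageNode(name, parent=self)
        self.children.append(child)
        return child

    def lineage(self) -> list[str]:
        """Names from the site root down to this page."""
        names = []
        node: Optional[PageNode] = self
        while node is not None:
            names.append(node.name)
            node = node.parent
        return list(reversed(names))

    @property
    def path(self) -> str:
        """URL path derived from the parent chain, e.g. /example/marketing."""
        return "/" + "/".join(self.lineage())

    @property
    def depth(self) -> int:
        """Number of levels in the path, counting the site root as level 1."""
        return len(self.lineage())

    def move_to(self, new_parent: PageNode) -> None:
        """Re-parent this page; its descendants move with it.

        Does not guard against moving a page under one of its own descendants.
        """
        if self.parent is not None:
            self.parent.children.remove(self)
        self.parent = new_parent
        new_parent.children.append(self)


if __name__ == "__main__":
    root = PageNode("example")
    campaign = root.add("marketing").add("campaigns").add("winter2010")
    landing = campaign.add("landing")
    print(campaign.path, campaign.depth)  # /example/marketing/campaigns/winter2010 4
    campaign.move_to(root)
    print(landing.path)  # /example/winter2010/landing
```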

Magnolia allows you to manage an unlimited number of sites in AdminCentral. Each site has its own site tree, which keeps each hierarchy shallow. You can map a unique domain name to each site, for example to create microsites for campaigns. To a crawler, these appear either as separate sites (www.winter2010.com) or as parts of your domain (winter2010.example.com).
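Conceptually, domain mapping is a lookup from the request's host name to the root of one site tree. The sketch below illustrates that idea with a hypothetical `SITE_ROOTS` table; it is not how Magnolia's domain mapping is configured. Both campaign host names resolve to the same tree, so the choice between a separate domain and a subdomain only changes how crawlers perceive the microsite.

```python
from typing import Optional

# Hypothetical mapping from request host names to the root of each site tree.
SITE_ROOTS = {
    "www.example.com": "/example",
    "www.winter2010.com": "/winter2010",
    "winter2010.example.com": "/winter2010",
}


def resolve(host: str, path: str) -> Optional[str]:
    """Map a request (host + path) to a location in the matching site tree.

    Returns None when no site is configured for the host.
    """
    root = SITE_ROOTS.get(host.lower())
    if root is None:
        return None
    path = path.strip("/")
    return root if not path else f"{root}/{path}"
```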

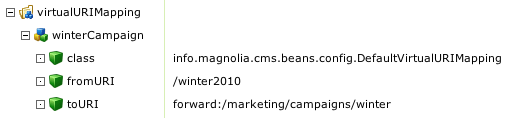

Sometimes deeper structures are hard to avoid. For example, you may want to create a campaign inside your main site at /example/marketing/campaigns/winter2010. Even in this case you can follow the shallow hierarchy principle: Magnolia lets you shorten the URL with URI mapping. Visitors and crawlers access the campaign at www.example.com/winter2010, while the content is served from its true location deeper in the hierarchy.
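The idea behind URI mapping can be sketched as a prefix rewrite performed on the server. The Python below is illustrative only: `URI_MAPPINGS` and `map_uri` are hypothetical names, and Magnolia configures its mappings rather than requiring code like this. It models a forward, not a redirect, so the short URL is what visitors and crawlers see while the content comes from the deeper path.

```python
# Hypothetical short-URI -> content-path mappings; illustrative only, not
# Magnolia's configuration format.
URI_MAPPINGS = {
    "/winter2010": "/example/marketing/campaigns/winter2010",
}


def map_uri(request_uri: str) -> str:
    """Return the internal path that should serve request_uri.

    Models a server-side forward: the visitor's URL stays short while the
    content comes from the deeper location. Unmapped URIs are returned unchanged.
    """
    # Try longer prefixes first so a more specific mapping wins.
    for short, target in sorted(URI_MAPPINGS.items(), key=lambda item: len(item[0]), reverse=True):
        # Match the prefix only on a whole segment, so /winter2010x is not mapped.
        if request_uri == short or request_uri.startswith(short + "/"):
            return target + request_uri[len(short):]
    return request_uri
```

Matching on whole path segments avoids accidentally capturing unrelated URLs that merely share the same leading characters.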